Janet Gregory and Lisa Crispin’s book “Agile Testing”, which challenged the traditional view of testing, sparked a discussion in the testing community that seems to have lasted ever since the book was published. Before this discussion, we had the issue of the sequential approach to testing, which often resulted in the dreaded “testing crunch” (the development phase taking longer than planned and the testing phase taking the hit, with all testing crammed into a very narrow time slot). I bet that quite a few people have heard about testing zombies. If not, here’s a good intro. So, this is a good question, and I’ll try to answer it by listing good behaviors that I have witnessed throughout the years.

A Good Tester Learns the Domain

If anyone on the team should know the domain and business rules, it’s the tester. Not only does a tester need to make sure that the team’s product implements the business rules and does what it’s supposed to, but the tester also has the most exposure to the domain and the time to get to know it. Figuring out interesting test cases in complex domains requires both testing skills and deep domain knowledge, which is best acquired by studying the domain through books, blogs, or other media, and by talking to the product people and customers. One of my best team onboarding experiences was being brought up to speed by the team’s tester. She knew everything about the intricacies of real-time trading from the domain point of view.

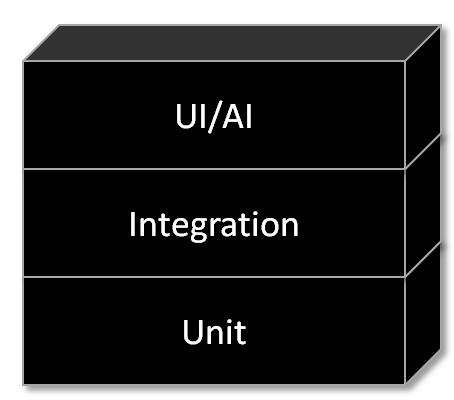

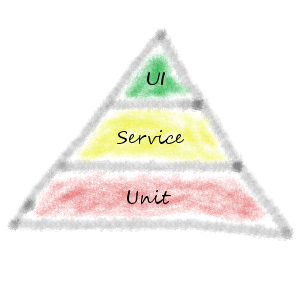

A Good Tester Keeps Track of Tools

In my mind, a good tester knows quite a lot about tools. An average tester doesn’t need to, but a good one does. The good tester knows what testing frameworks the team employs, what they do, and what they don’t cover. Let’s take a simple example. If the team has its unit testing in order, using a unit testing framework with some test double library, and has a number of end-to-end tests using WebDriver, the good tester will look into performance testing frameworks, or suggest that test data be managed better, or that the team look more into integration testing. The good tester is by no means an automation person, but wants to understand what the tools used in the development process actually do and don’t do.

A Good Tester Leads the Team’s Exploratory Testing

Exploratory testing isn’t a random walk through the system’s features. It’s a rather systematic approach, especially if it involves the entire team. Therefore, it needs to be planned and coordinated. If you’re familiar with James Whittaker’s book “Exploratory Software Testing”, you know that there are many approaches to testing your application, which he calls tours. A good tester should know about those and take on the responsibility of ensuring that the team makes exploratory testing happen: What tours do we run? Which do we skip, or do later? How do we document the session? What do we do with the results?

A Good Tester Uses and Teaches Testing Techniques

I have yet to read a captivating and intriguing book on software testing. Still, such books do contain descriptions of testing techniques, and at the end of the day, these constitute the core of the testing craft. Developer testing incorporates some of them: boundary value analysis and equivalence partitioning, truth tables, state diagrams, and pairwise testing. There are more, though, and even average testers should know them in their sleep, not to mention good testers.
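
To make two of these techniques concrete, here is a minimal Python sketch of boundary value analysis and equivalence partitioning. The business rule (an order quantity must be between 1 and 100, inclusive) and the names `is_valid_quantity`, `boundary_values`, and `partition_representatives` are made up for illustration; they don't come from any particular framework or project.

```python
def is_valid_quantity(qty):
    """Hypothetical business rule: an order quantity must be 1..100 inclusive."""
    return 1 <= qty <= 100


def boundary_values(low, high):
    """Boundary value analysis for an inclusive integer range [low, high]:
    the values just outside, on, and just inside each edge (duplicates removed)."""
    return sorted({low - 1, low, low + 1, high - 1, high, high + 1})


def partition_representatives(low, high):
    """Equivalence partitioning: one representative per partition
    (below the range, inside it, above it)."""
    return {
        "below": low - 1,
        "inside": (low + high) // 2,
        "above": high + 1,
    }


if __name__ == "__main__":
    # Check the rule at every boundary value.
    for value in boundary_values(1, 100):
        print(value, is_valid_quantity(value))

    # Check the rule once per equivalence partition.
    for name, value in partition_representatives(1, 100).items():
        print(name, value, is_valid_quantity(value))
```

The point of the sketch is the selection of inputs, not the rule itself: six boundary values and three partition representatives cover the places where an off-by-one mistake in the comparison would show up.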

A Good Tester Acts as the Default Spokesperson for Quality

“Default spokesperson.” What does that even mean? It refers to the person who always takes the quality stance: in planning meetings, in architecture workshops, near the coffee machine. It’s the one who asks questions like:

- How do we know that the users will like it?

- How do we test this?

- Have we thought of everything?

- Will this new feature slow down the system?

- Doesn’t this new feature clash with existing business rules?

- Can this be automated, and how?

- Won’t this change add to our technical debt?

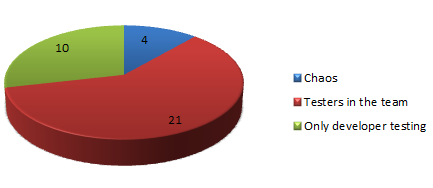

A Good Tester Ensures that Everybody is a Quality Champion

I have never seen it work to lay the burden of ensuring overall quality on one or a few people. In day-to-day development work, having dedicated testers or test automation engineers who are responsible for “testing” or “quality” (whatever those are, by the way) usually results in developers throwing work over the wall: the test automation engineer is supposed to “automate tests” and the tester is supposed to “test.” Nobody benefits from this in the long run. Knowing this, a good tester encourages and invites everybody to take responsibility for quality.