I’ve always cared about the quality of my code. However, I didn’t always have the necessary tools to achieve it. For the first 6-7 years (of hobby programming in high school and during my university studies) I had to resort to what we call “manual checking” nowadays. Then my professional career began and I got exposed to different types of organizations and their ways of working.

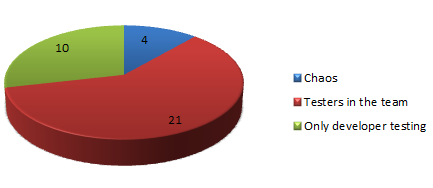

Fast forward 15 years. By now I’ve worked in, or close to, roughly 25 teams, and I can see some patterns emerging. Basically, I’ve encountered three ways of working with quality assurance, and I’ve seen traces of a fourth. This is what I’ve found.

Cowboy/chaos teams: I’ve encountered these teams in small organizations or as guerrilla teams operating under the corporate radar. Such teams have neither testers nor anything that resembles quality assurance. Their testing amounts to manual checking performed by the developers in conjunction with a release or when one of their rather frequent bugs is fixed. Such teams start out very fast, but the code they produce crumbles under its own weight after a few months. Fortunately, the teams I’ve observed have worked in areas where bugs weren’t that much of a problem: nobody would get injured and they wouldn’t make the newspapers either. Bugs would “only” make the customers unhappy.

Teams with testers on the team: My experience of such teams ranges from newly formed teams struggling to understand how to work in a cross-functional, iterative, and incremental manner, to rather well-oiled ones. Unfortunately, the first category has dominated. In organizations that have practiced a strict division of labor, i.e. separate development and QA, forming cross-functional teams isn’t an easy task. The mini waterfall iteration is a common anti-pattern, and it’s a result of old habits: developers still throw untested code over the wall (although the wall isn’t there anymore), and testers keep compensating for an inferior development process by just checking that the developers haven’t made any major boo-boos. There isn’t any time to do more than that, since all testing is crammed into the last two days of the iteration.

Code quality may also be an issue in teams that are just starting out as agile/cross-functional. Back in the old days, they released once every few months and took the occasional pain of integration hell and manual regression testing. This way of working has left them unprepared for frequent deliveries, which in turn require skills in unit testing, refactoring, and continuous integration and deployment.

The teams I’ve seen master the basics of these development techniques have gone on to address the challenge of making agile testing truly work: making the testers proactive instead of reactive, planning all testing activities properly during iteration planning meetings, pairing on testing and programming, automating manual checks, and perfecting exploratory testing.

Teams that only rely on developer testing: I’ve also been able to work in teams that relied solely on developer testing. In such teams pretty much all checks were automated, and they had thousands of unit tests and hundreds of integration and end-to-end tests. They were also proficient in refactoring and continuous delivery. My experience is that these teams only lacked good exploratory testing and sometimes specialized types of testing, like usability or security testing. Given that they had a good product owner (and they were able to foster one to some extent too), the worst mistake they would make was to produce something less aesthetically appealing or not 100% user-friendly.

I don’t have enough evidence, but I believe that the difference between chaotic cowboy teams and teams that do developer testing lies in the mission criticality of their product. The developer testing teams I have experience with all worked on software that had to be correct. In the absence of a set of QA activities and people to perform them, they self-organized into embracing developer testing.

Organizations with separate QA and development: Despite more than 15 years in the industry, I haven’t seen the true waterfall setup up close. However, I’ve seen traces and residues of it when working in banking and the travel industry. To be fair, we have to acknowledge that banks and flight booking usually work, so separating development and testing evidently can work. Then again, the code in these systems is really hard to change and few people dare to touch it, much less refactor or delete something. I believe this is a result of relying on hand-written test protocols rather than test code.

Given this experience, I’m convinced that you should put your money in the developer testing basket. This is why:

- Teams that do only developer testing can improve with good testers, but manage without

- Teams that aim to become cross-functional and good at agile testing will be helped by developer testing practices, since they’ll make their code better and free up their testers’ time

- Chaos teams that do cowboy coding have a fighting chance to improve their code and quality if they engage in developer testing

As always, your mileage may vary, but my unscientific study of 25 teams in banking, the travel industry, gaming, the public sector, and directory services has pointed me in the direction of developer testing. I’d love to hear your stories, examples, and counterexamples.