A few days ago, I listened to Gojko Adzic’s talk “Humans vs Computers: five key challenges for software quality tomorrow” at Jfokus. It was a great talk and it really gave me some food for thought. This summary will not do it justice, but basically the plot was that our software is now being used by other software, and there’s AI and voice recognition, and a mix of all this will (and already does) cause new kinds of trouble. Not only must we be prepared for the fact that the “user” is no longer human; we must also take into account new edge cases, such as twins for facial and voice recognition, and the fact that our software may stop working because someone else’s does. All in all, the risk is rightfully shifting towards integration, and to handle it, we need to turn to monitoring for unexpected behavior. This made Mr. Adzic propose that we do something about the test automation pyramid. Turn it upside down maybe?

Personally, I vote for the test automation monolith :), or rectangle. I’ll tell you why. First, I have to admit that this talk made some pieces fall into place for me. My ambition with regard to developer testing is to raise the bar in the industry. I don’t want us to wonder about how many unit tests we need to write or how we should name them. Mocks and stubs should be used appropriately, and testability should be in every developer’s autonomic nervous system. But why? And here’s the eye opener: because we’ll need to be solving harder problems in a few years (if not today already). Instead of, or more likely in addition to, finding simple boundary values to avoid off-by-one errors, we’ll also need to handle the twins using voice authorization to log in to our software. Needless to say, we shouldn’t spend too much of our mental juice writing simple unit tests and the like.

That being said, we can’t abandon the bottom layer of the pyramid. Imagine handling strange AI-induced edge cases in a codebase that isn’t properly crafted for testability and tested to some degree. It would probably be the equivalent of adding unit tests to poorly designed code or even worse. Yes, monitoring will probably play a greater part in the software of tomorrow, but isn’t it just another facet of observability?

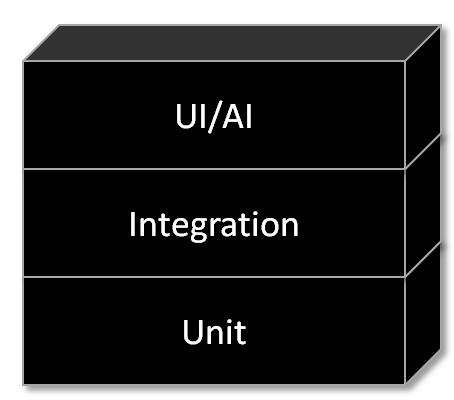

So, what will probably happen next is that the top of the testing pyramid will grow thicker, maybe like this (couldn’t resist the “AI”):