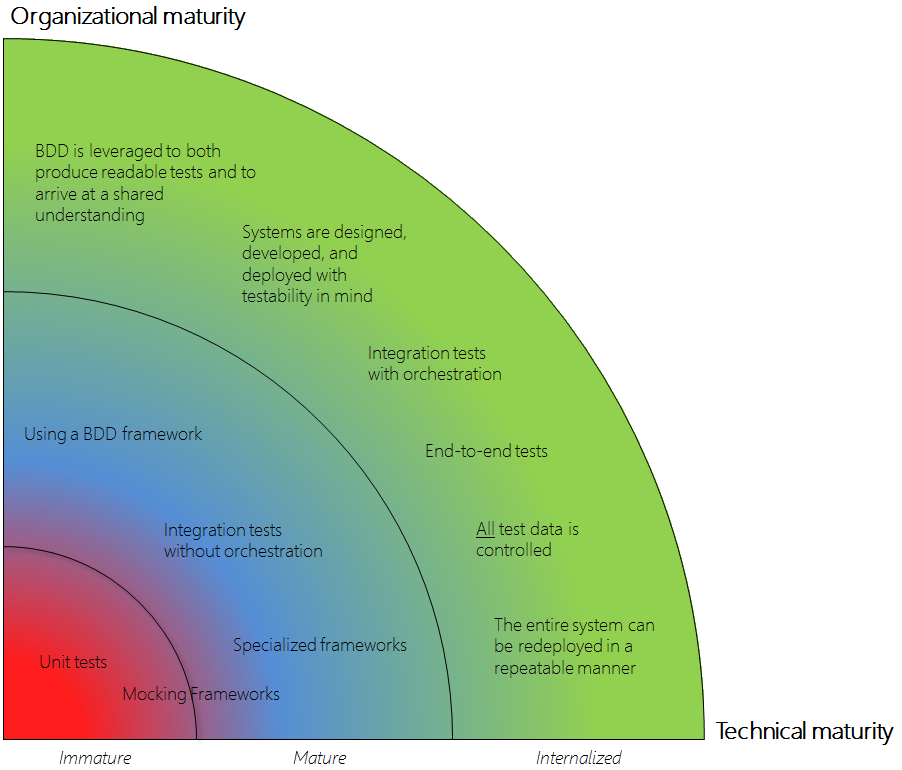

I’ve been talking about this topic for years, and have been asked questions about it while giving presentations on developer testing. Still, I’ve been unable to articulate fully what I mean by the “Developer Testing Maturity Curve”. Many wanted to know, because it’s tempting to rank your organization once there’s something to rank against.

After organizing my thoughts for a while I’ve finally come up with a model that matches my experience of how developer testing tends to get implemented across different organizations. Just a caveat: I may revise this model if I learn more or have an epiphany, but this is what it looks like today.

Graph Axes

The horizontal axis is technical maturity. It’s the knowledge and understanding of various tools and techniques. I consider merely employing a unit testing framework “immature”, because you need relatively little technical skill to author some simple unit tests. Conversely, implementing an infrastructure that enables repeatable, automated end-to-end tests would be on the other side of the scale.

The vertical axis is more elusive. In the image, I call it “organizational maturity”, but in reality, it means several things:

- Understanding that developer testing (any automated testing, in fact) must be allowed to take time

- Acknowledging testability as a primary quality attribute and designing the systems accordingly

- Willingness to refactor legacy code to make it testable

- Time and motivation to clean up test data to make it work for you, not against you

- Dedicating time and resources to cleaning up any other old sins that prevent you from harnessing the power of developer testing and automated checking, be they related to infrastructure, architecture, or the development process in general

You should get the idea… If you’re in an organization that has internalized the above, you won’t be throwing quality and (developer) testing out the window as soon as there’s the slightest risk of not meeting a deadline.

The Maturity Zones

The hard part of this model was to place the individual practices in the different zones. Fortunately, in my experience, technical and organizational maturities seem to go hand in hand. By that, I mean that I haven’t seen an organization with superior technical maturity that would totally neglect the organizational climate needed to sustain the technical practices, and vice versa. After having made this discovery, placing the individual practices became easier. Next, I’ll describe what they are.

Immature

Unit tests

Having only unit tests is immature in my opinion. True, certain systems can run solely on unit tests, but they are the exception. If you only do unit tests, you probably have no way of dealing with integration and realistic test data. From an organizational point of view, having only unit tests means that developers write them because they must, not because they see any value in them. If they did see the value, they’d engage in other developer testing activities as well.

Mocking frameworks

This is the only artifact on the border. Let me explain. A mature way of employing a mocking framework assumes that you understand how indirect input and output affect your design and its testability, and then use the framework to produce the correct type of test double. The immature approach is to call all test doubles “mocks” and use the framework because everyone else seems to.
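To make the distinction concrete, here is a minimal sketch using Python's standard `unittest.mock`. The `OrderService` class and its collaborators are hypothetical and exist only for illustration. The first test uses a stub to control indirect input (a looked-up price); the second uses a mock to verify indirect output (a sent message).

```python
from unittest.mock import Mock


class OrderService:
    """Hypothetical class under test, invented for this example."""

    def __init__(self, price_lookup, mailer):
        self.price_lookup = price_lookup  # supplies indirect input
        self.mailer = mailer              # receives indirect output

    def place_order(self, item, quantity, email):
        total = self.price_lookup.price_of(item) * quantity
        self.mailer.send(email, f"Order total: {total}")
        return total


def test_total_uses_looked_up_price():
    # Stub: feeds a canned value into the code under test.
    price_lookup = Mock()
    price_lookup.price_of.return_value = 5
    service = OrderService(price_lookup, Mock())
    # The assertion is on the result, not on the double.
    assert service.place_order("pen", 3, "a@example.com") == 15


def test_confirmation_is_sent():
    price_lookup = Mock()
    price_lookup.price_of.return_value = 5
    # Mock: the assertion is on the interaction itself.
    mailer = Mock()
    OrderService(price_lookup, mailer).place_order("pen", 3, "a@example.com")
    mailer.send.assert_called_once_with("a@example.com", "Order total: 15")


test_total_uses_looked_up_price()
test_confirmation_is_sent()
```

The same framework class produces both doubles; what differs is whether the test asserts on state or on an interaction, and choosing between them deliberately is the point.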

Mature

Specialized frameworks

These are frameworks that help you solve a specific testing problem. They may be employable at unit test level, but they’re most frequently used (and arguably most useful) for integration tests. Examples? QuickCheck, Selenium WebDriver, RestAssured, Code Contracts. Some of these frameworks may be entire topics and areas of competence in themselves. Therefore, I consider it mature to make use of them.
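As a rough illustration of the kind of problem such a framework solves, the sketch below hand-rolls the idea behind property-based testing (the QuickCheck family) using only Python's standard library. It is not QuickCheck's API, and it lacks features a real framework provides, such as shrinking failing inputs. The `encode`/`decode` functions are hypothetical examples.

```python
import random


def encode(text):
    """Run-length encode a string: 'aaab' -> [('a', 3), ('b', 1)]."""
    runs = []
    for ch in text:
        if runs and runs[-1][0] == ch:
            runs[-1] = (ch, runs[-1][1] + 1)
        else:
            runs.append((ch, 1))
    return runs


def decode(runs):
    """Inverse of encode."""
    return "".join(ch * count for ch, count in runs)


def check_round_trip(trials=200, seed=0):
    """Property: decoding an encoded string yields the original string."""
    rng = random.Random(seed)  # seeded so a failure can be reproduced
    for _ in range(trials):
        length = rng.randint(0, 20)
        # A two-letter alphabet makes long runs likely.
        text = "".join(rng.choice("ab") for _ in range(length))
        assert decode(encode(text)) == text, f"round trip failed for {text!r}"
    return trials


check_round_trip()
```

Instead of listing examples by hand, the test states a property and lets generated inputs probe it, which is where a specialized framework starts to earn its learning curve.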

Integration tests without orchestration

What does “without orchestration” mean? It means that the framework you’re using does everything for you and that you don’t need to write any code to start components or set them to a certain state. I’m thinking about frameworks like WireMock, Dumbster, or Spring’s test facilities.

Using a BDD framework

You can use a BDD/ATDD framework to launch tests, hopefully the complex ones. This requires its own infrastructure and training, so I consider it mature (barely). In this sector, you’re not reaping the full benefits of BDD, just using the tools.

Internalized

Leveraging BDD

In contrast to the previous zone, where the BDD framework is just a tool, in this sector the organization understands that BDD is about shared understanding and a common vocabulary. Furthermore, various stakeholders are involved in creating specifications together, and they use concrete examples to do so.

Testability as a primary quality attribute

Testability—controllability, observability, smallness—applies at all levels of system and code design. Organizations that have internalized this practice ensure that all new code and refactored old code take it into account. When it comes to designing code, it’s mostly about ensuring that it’s testable at the unit level and that replacing dependencies with test doubles is easy and natural. At the architectural level it’s about designing the systems so that they can be observed and controlled (and kept small), and that any COTS that enters the organization isn’t just a black box. The same goes for services operated by partners and SaaS solutions.
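At the code level, controllability and observability often come down to how dependencies are passed in. The following is a minimal sketch, assuming a hypothetical `Session` class whose expiry depends on the current time. Injecting the clock makes time controllable from the test, and exposing `is_expired()` makes the outcome observable.

```python
from datetime import datetime, timedelta


class Session:
    """Hypothetical class; the clock is injected instead of hard-coded."""

    def __init__(self, clock=datetime.now, ttl=timedelta(minutes=30)):
        self._clock = clock
        self._ttl = ttl
        self.started_at = clock()

    def is_expired(self):
        return self._clock() - self.started_at > self._ttl


class FakeClock:
    """Hand-written test double that lets the test control time."""

    def __init__(self, now):
        self.now = now

    def __call__(self):
        return self.now

    def advance(self, delta):
        self.now += delta


def test_session_expires_after_ttl():
    clock = FakeClock(datetime(2020, 1, 1, 12, 0))
    session = Session(clock=clock)
    assert not session.is_expired()
    clock.advance(timedelta(minutes=31))  # no sleeping, no flakiness
    assert session.is_expired()


test_session_expires_after_ttl()
```

Had `Session` called `datetime.now()` directly, the test would need to wait 31 minutes or patch a global; the default argument keeps production code unchanged while leaving a seam for tests.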

Integration tests with orchestration

There’s a fine line between such tests and end-to-end tests. The demarcation line, albeit a bit subjective, is the scope of the test. An integration test with orchestration targets two components/services/systems, but it can’t rely solely on a specialized framework to set them up. It needs to do some heavy lifting.

End-to-end tests

Anything goes here! These tests will start up services and servers, populate databases with data and simulate a user’s interaction with this system. Doing this consistently and in a repeatable fashion is a clear indication of internalized developer testing practices.

All test data is controlled

This is true for a majority of systems: The more complex the tests, the more complex the test data. At some point your tests will most likely not be able to rely on specific entries in the database (“the standard customer”) and you’ll need to implement a layer that creates test data with specific properties on the fly before each test. If you can do this for all test data, I’d say you’ve internalized this practice.
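One way such a layer might look is sketched below, using Python's built-in `sqlite3` with an in-memory database as a stand-in for a real one. The `customer` table, its columns, and every function name are assumptions made up for this example. Each test creates exactly the rows it needs, with sensible defaults and explicit overrides for the properties that matter.

```python
import itertools
import sqlite3

_sequence = itertools.count(1)  # keeps generated names unique


def fresh_database():
    """Hypothetical schema; a real system would apply its own migrations."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE customer ("
        "id INTEGER PRIMARY KEY, name TEXT, credit_limit INTEGER, active INTEGER)"
    )
    return conn


def create_customer(conn, **overrides):
    """Insert a customer with defaults; tests override only what they care about."""
    row = {"name": f"customer-{next(_sequence)}", "credit_limit": 1000, "active": 1}
    unknown = set(overrides) - set(row)
    if unknown:
        raise ValueError(f"unknown customer fields: {sorted(unknown)}")
    row.update(overrides)
    cursor = conn.execute(
        "INSERT INTO customer (name, credit_limit, active) "
        "VALUES (:name, :credit_limit, :active)",
        row,
    )
    return cursor.lastrowid


def active_customers_below_limit(conn, amount):
    """Hypothetical code under test."""
    rows = conn.execute(
        "SELECT id FROM customer WHERE active = 1 AND credit_limit < ? ORDER BY id",
        (amount,),
    )
    return [r[0] for r in rows]


def test_only_active_low_limit_customers_are_returned():
    conn = fresh_database()
    low = create_customer(conn, credit_limit=100)
    create_customer(conn, credit_limit=100, active=0)  # inactive, must be ignored
    create_customer(conn, credit_limit=5000)           # limit too high
    assert active_customers_below_limit(conn, 500) == [low]


test_only_active_low_limit_customers_are_returned()
```

The test no longer depends on “the standard customer” existing in a shared database; the data it relies on is visible in the test itself.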

The entire system can be redeployed in a repeatable manner

If you have your end-to-end tests in place, you most likely have ticked off this practice. If not, there’s some work to do. A repeatable deployment usually requires a bit of provisioning, a pinch of database versioning, and a grain of container/server tinkering. Irrespective of the exact composition of your stack, you want to be able to deploy at will. Why is this important from a developer testing perspective? Because it implies controllability, as all moving parts of the system are understood.

And the point is?

Congratulations on making it this far. What actions can you take now? If you really want to gauge your maturity level, please do so. My advice is that you map the areas in the maturity curve to your organization’s/team’s architecture and practices, and start thinking about where to start digging and in what order.

Excellent post Alex, I especially like your references to Testability which is often overlooked.

Thanks! You’ve encouraged me to write more about it and start a brand new section on vocabulary.

I need you to do a brown bag where I work.

“End-to-end tests

Anything goes here! These tests will start up services and servers, populate databases with data and simulate a user’s interaction with this system. Doing this consistently and in a repeatable fashion is a clear indication of internalized developer testing practices.”

But E2E tests are brittle and expensive!

It seems like you’re encouraging people to write many E2E tests.

Thanks for helping me to clarify this one. I don’t consider “consistent and repeatable” to equal “many”. Given all the caveats above, my opinion is that teams that are truly skilled in developer testing will partly be able to mitigate the disadvantages, and partly just know when not to shy away from end-to-end tests. My default number for an “average” system would be 10-30 well-designed end-to-end tests.